Design Concept

Ancient manuscripts are fascinating objects that often hold great historical and spiritual significance. Our access to these objects is mediated through several barriers. First, there is the physical barrier: because of their value and fragility, these objects, if they are presented to the public at all, are usually encased in glass and untouchable. Then there are the barriers of foreign languages, archaic scripts and the manuscripts’ physical condition, all of which make the text difficult to interpret.

This is where the idea of the interactive scroll comes from – a device that allows the user to physically interact with a variety of ancient manuscripts.

The user-facing object is a blank scroll that can be rolled using rods at its sides. The scroll rests on a clear acrylic surface, and Hebrew text from ancient Bible manuscripts is projected onto it from below. As the user turns the rods, the scroll advances and the projected text moves with it; turning the other rod moves it in reverse. A reading frame sits next to the table. When it is placed on the paper, an English translation of the text appears inside the frame. Additional layers of content include a progress bar indicating the current position in the scroll and some background information on the texts and their sources.

The installation uses rotary encoders and an Arduino microcontroller to translate the physical rotation of the scroll rods to the movement of the projected text, and a computer vision algorithm in Processing to track the reader and project the translation in the appropriate position.
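One way to picture the mapping from rod rotation to text movement is a small model that turns signed encoder steps into a pixel offset along the scroll and stops at both ends. The sketch below is plain Java (the language Processing is built on). It assumes the Arduino sends one net step count per message and that a fixed pixels-per-step calibration exists; the class and parameter names are hypothetical, not the project's code.

```java
/**
 * Hypothetical model of the scroll position, driven by signed encoder step counts.
 * Assumes the Arduino reports one net step count per message; how the two rods'
 * counts are combined (and each rod's sign, which depends on mounting) is left out.
 */
class ScrollModel {
    private final double pixelsPerStep; // assumed calibration: text movement per encoder step
    private final double maxOffset;     // scroll image length minus the visible window, in pixels
    private double offset = 0;          // current start of the visible window along the scroll

    ScrollModel(double pixelsPerStep, double maxOffset) {
        this.pixelsPerStep = pixelsPerStep;
        this.maxOffset = maxOffset;
    }

    /** Moves the text by a signed number of encoder steps, stopping at either end of the scroll. */
    void applySteps(int steps) {
        offset += steps * pixelsPerStep;
        offset = Math.max(0, Math.min(maxOffset, offset));
    }

    double offset() {
        return offset;
    }
}
```

Clamping at both ends keeps the projected text from running off the scroll when a user keeps turning a rod past the beginning or end.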

Initial project concept blog post

Main Challenges

Fabrication

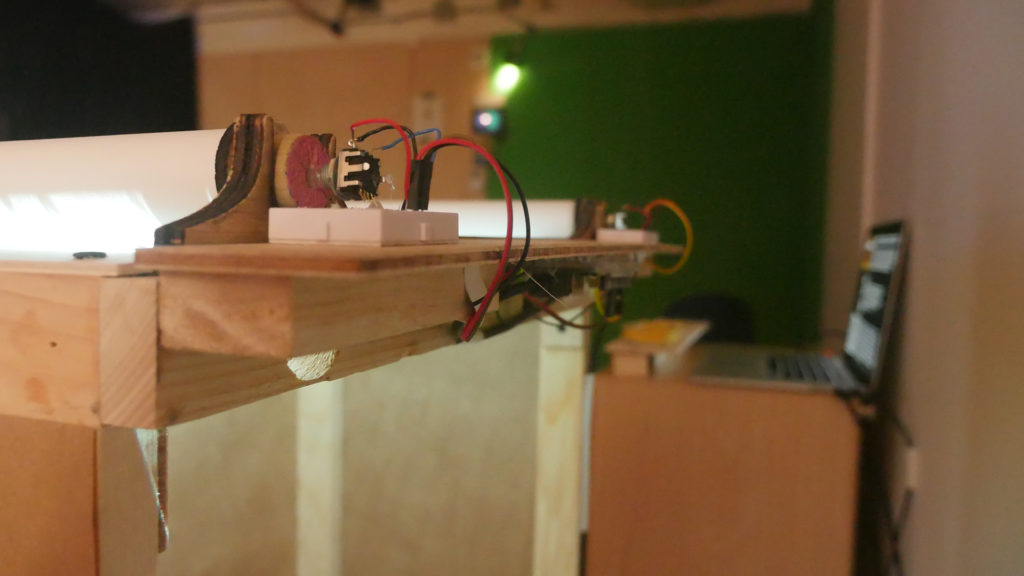

One of the more difficult parts was the connection between the wooden construction and the electronics. I experimented with a wheel encoder, which would have allowed the sensor to be separated from the scrolling rods. But it seems wheel encoders, at least the ones I could find, only measure rotational speed, not direction. So I went back to the good ol’ rotary encoder. By drilling into the back of the rods and gluing the encoder knobs inside, then hot-gluing the sensor to the top of a small breadboard, I got stable readings, and the mechanism has survived many users and several moves.
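The difference between the two sensors comes down to channels. A single-channel pulse train tells you how fast something turns, while a quadrature (incremental) rotary encoder has two outputs, A and B, a quarter cycle apart, and the order in which they change gives the direction. The sketch below decodes that with a standard transition lookup table, in plain Java for illustration; on the Arduino the same table would be written in C++. The class name is hypothetical, and which direction counts as positive is arbitrary because it depends on wiring.

```java
/** Hypothetical decoder for a two-channel (A/B) quadrature signal. */
class QuadratureDecoder {
    // Indexed by (previousState << 2) | currentState, where state = (A << 1) | B.
    // +1 when A leads B (00 -> 10 -> 11 -> 01 -> 00), -1 when B leads A,
    // 0 for no change or an invalid jump where both channels changed at once.
    private static final int[] TRANSITION = {
         0, -1,  1,  0,
         1,  0,  0, -1,
        -1,  0,  0,  1,
         0,  1, -1,  0
    };

    private int state;      // last sampled (A << 1) | B
    private long position;  // running signed step count

    QuadratureDecoder(boolean a, boolean b) {
        state = encode(a, b);
    }

    private static int encode(boolean a, boolean b) {
        return (a ? 2 : 0) | (b ? 1 : 0);
    }

    /** Feeds one sample of both channels and returns the decoded step (-1, 0 or +1). */
    int sample(boolean a, boolean b) {
        int next = encode(a, b);
        int step = TRANSITION[(state << 2) | next];
        state = next;
        position += step;
        return step;
    }

    long position() {
        return position;
    }
}
```

A single channel would only ever see its own pulses, which is why it can report speed but not which way the rod is turning.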

The other tricky element was constructing the reader object. I wanted the frame to be as small as possible, but it had to contain four IR LEDs, a battery and all the accompanying circuitry. The first attempt at soldering all this mess together was a dud. I then decided I could make do with just two IR LEDs at opposite corners, which would provide the minimum data needed to track the corners of the reader frame. This meant more room and far fewer solder joints. The final circuit consisted of a switched battery holder with a single 3V battery and two IR LEDs, each connected in series with a resistor between power and ground.
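The value of each LED’s series resistor follows from Ohm’s law applied to the voltage left over after the LED’s forward drop. The forward voltage $V_F$ and current $I_F$ below are assumed typical values for a small IR LED, not figures from this build; the LED’s datasheet gives the real ones.

```latex
R = \frac{V_{\text{batt}} - V_F}{I_F}
  = \frac{3.0\,\text{V} - 1.3\,\text{V}}{20\,\text{mA}}
  = 85\,\Omega
```

Rounding up to a standard 100 Ω resistor gives about (3.0 − 1.3) / 100 = 17 mA, slightly under the assumed target current.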

Serial communication

During the development phase I kept each function in a separate sketch, and each part worked well on its own. When I merged all of the code into one sketch, the serial data became unreliable: movement was only detected in one direction, and at some point movement was detected even when nothing was physically moving and the Arduino was not sending any serial data. I tried the serial call-and-response method but could not get it to work. However, simply moving the serial-reading code out of the draw() loop and into the serialEvent() function gave a stable and consistent result, because the sketch then only processes serial data when a carriage return arrives, not on every frame. Apparently, though, this problem only becomes noticeable when other tasks, like pixel analysis, add to the processing load.
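The underlying idea is line framing: raw serial bytes arrive in arbitrary chunks, so a message should only be parsed once its terminator has arrived. In Processing this is what `bufferUntil()` together with `serialEvent()` provides; the plain-Java class below only illustrates the principle. It assumes each message is one signed step count sent with Arduino’s `Serial.println()`, which ends lines with `\r\n`; the class name and message format are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical line assembler: buffers serial bytes and returns only complete lines. */
class LineAssembler {
    private final StringBuilder pending = new StringBuilder();

    /** Appends a received chunk and returns every line completed by '\n' in it. */
    List<String> feed(byte[] chunk) {
        List<String> lines = new ArrayList<>();
        for (byte b : chunk) {
            char c = (char) (b & 0xFF);
            if (c == '\n') {
                // trim() drops the '\r' that println() sends before '\n'
                lines.add(pending.toString().trim());
                pending.setLength(0);
            } else {
                pending.append(c);
            }
        }
        return lines; // a partial message stays in `pending` until its terminator arrives
    }

    /** Parses a line as a signed step count; malformed lines yield 0 rather than a bogus move. */
    static int parseSteps(String line) {
        try {
            return Integer.parseInt(line);
        } catch (NumberFormatException e) {
            return 0;
        }
    }
}
```

Because half-received messages are held back instead of being parsed, a slow frame can no longer turn a fragment like `-` or a stale value into a phantom movement.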

Computer Vision

My initial setup used a brightly colored object as the reading frame, with the camera mounted above the table. I used the OpenCV library for Processing, which includes an example sketch that tracks objects by hue. Since the text projection is close to black and white, color tracking should have been easy. But in the camera’s view the projection showed bands of every color, and these threw off the color tracking (a camera with manual exposure settings might have solved that, but would have required more equipment, such as a video capture device).
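Hue tracking works by keeping only the pixels whose hue falls in a chosen range, usually with a saturation floor so that greys (whose hue is undefined) are ignored. The plain-Java sketch below shows that principle; it is not the OpenCV for Processing example itself, and the class name, thresholds and packed `0xRRGGBB` pixel format are assumptions.

```java
/** Hypothetical hue-range mask illustrating hue-based tracking. */
class HueRangeMask {
    /** Hue in degrees [0, 360); returns 0 for greys, where hue is undefined. */
    static float hue(int r, int g, int b) {
        int max = Math.max(r, Math.max(g, b));
        int min = Math.min(r, Math.min(g, b));
        if (max == min) return 0f;
        float d = max - min;
        float h;
        if (max == r) h = ((g - b) / d) % 6f;
        else if (max == g) h = (b - r) / d + 2f;
        else h = (r - g) / d + 4f;
        h *= 60f;
        return h < 0 ? h + 360f : h;
    }

    /** Saturation in [0, 1]: how far the colour is from grey. */
    static float saturation(int r, int g, int b) {
        int max = Math.max(r, Math.max(g, b));
        int min = Math.min(r, Math.min(g, b));
        return max == 0 ? 0f : (max - min) / (float) max;
    }

    /**
     * Marks 0xRRGGBB pixels whose hue lies in [hueLo, hueHi] degrees (wrapping
     * through 0 when hueLo > hueHi) and whose saturation is at least minSat.
     */
    static boolean[] mask(int[] rgb, float hueLo, float hueHi, float minSat) {
        boolean[] out = new boolean[rgb.length];
        for (int i = 0; i < rgb.length; i++) {
            int r = (rgb[i] >> 16) & 0xFF, g = (rgb[i] >> 8) & 0xFF, b = rgb[i] & 0xFF;
            float h = hue(r, g, b);
            boolean inRange = hueLo <= hueHi ? (h >= hueLo && h <= hueHi)
                                             : (h >= hueLo || h <= hueHi);
            out[i] = inRange && saturation(r, g, b) >= minSat;
        }
        return out;
    }
}
```

Colored bands in the camera image are saturated, so any band whose hue happens to fall inside the target range passes this test just like the tracked object would.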

At the same time I realized that the camera should be placed underneath, so that users could lean over the table without blocking it. Then it became clear that the tracked object would have to be visible through the paper, i.e. it would have to be a light source.

The next setup was promising at first. Using an LED magnifier and changing the code to track a brightness range instead of a hue range, I was able to achieve good tracking (see video below).

However, the tracking wasn’t stable, and moving the installation to a different room for playtesting produced weaker results. At that point I decided to build my own reader object with infrared LEDs inside it, which avoided the color artifacts and stray-light problems.

Ultimately I took out the OpenCV code and used a more basic brightness-tracking algorithm, which was enough for what I needed.
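A basic brightness tracker can be as simple as thresholding each pixel’s brightness and taking the bounding box of everything that passes. With two IR LEDs at opposite corners of the reader, that box spans the frame, as long as the frame is held roughly square to the camera (rotation is not handled). The sketch below is a hypothetical illustration in plain Java, not necessarily the algorithm used in the project; the class name, threshold and pixel format are assumptions.

```java
/** Hypothetical bounding box of all pixels at or above a brightness threshold. */
class BrightSpotBounds {
    /** Returns {minX, minY, maxX, maxY}, or null if no pixel reaches the threshold. */
    static int[] find(int[] rgb, int width, int height, int threshold) {
        int minX = Integer.MAX_VALUE, minY = Integer.MAX_VALUE;
        int maxX = -1, maxY = -1;
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int p = rgb[y * width + x];
                // Brightness as the largest colour channel (the HSB "value"), 0..255.
                int v = Math.max((p >> 16) & 0xFF, Math.max((p >> 8) & 0xFF, p & 0xFF));
                if (v >= threshold) {
                    minX = Math.min(minX, x);
                    minY = Math.min(minY, y);
                    maxX = Math.max(maxX, x);
                    maxY = Math.max(maxY, y);
                }
            }
        }
        return maxX < 0 ? null : new int[] {minX, minY, maxX, maxY};
    }
}
```

A single stray bright pixel stretches the box, which is one reason a dedicated IR light source, far brighter than anything else the camera sees, makes such a simple approach workable.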

User Experience

After the second round of user testing, I saw that people had some trouble turning the scroll rods in the correct direction, but mostly figured it out on their own. However, they weren’t exploring the entire length of the scroll, perhaps because they didn’t know how much of it there was. I then added arrows on the wheels attached to the rods (the wheels are part of the traditional design of the Torah scroll). These didn’t prove very useful during the winter show, probably because of the dim lighting in the space and because the arrows were at a bad angle: users look at the object from above, not from the front. I also added a progress bar at the top of the projection, which shows an image of the whole scroll and the current position, as well as some background information. Still, most people did not scroll more than a few turns in either direction, and seemed more satisfied by the effect of the interaction itself than interested in the content it provided.
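A progress bar that shows the whole scroll and the current position can be driven by a proportional mapping: the marker’s start and length on the bar are the visible window’s start and length along the scroll, scaled by bar width over scroll length. The plain-Java sketch below is one plausible way to do this; the class, method and parameter names are hypothetical.

```java
/** Hypothetical mapping of the visible part of the scroll onto a whole-scroll progress bar. */
class ProgressBar {
    /**
     * @param offset        start of the visible window along the scroll, in scroll pixels
     * @param visibleLength length of the visible window, in scroll pixels
     * @param totalLength   length of the whole scroll image, in scroll pixels
     * @param barWidth      width of the on-screen bar, in screen pixels
     * @return {markerStart, markerLength} in bar pixels
     */
    static double[] marker(double offset, double visibleLength, double totalLength, double barWidth) {
        // Keep the window inside the scroll so the marker never overhangs the bar.
        double start = Math.max(0, Math.min(offset, totalLength - visibleLength));
        return new double[] {
            start * barWidth / totalLength,
            visibleLength * barWidth / totalLength
        };
    }
}
```

Sizing the marker by the visible fraction, rather than drawing a fixed-width dot, also shows users at a glance how much of the scroll remains unexplored.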

Where to go next

I’m happy with my technical achievements in this project; indeed, most of my focus was on the technical elements and the interface design, and less on the actual content. It’s a good prototype for an educational tool, bringing a sense of magic and self-exploration to not-so-accessible subject matter. To turn it into a truly engaging interactive experience, I need to:

– find the stories I want to tell – from biblical source material or elsewhere

– understand what forms of content I need – more textual layers? illustration? moving images?

Posted in Fall '19 - Introduction to Physical Computation