with Erkin Salmorbekov

The ghost of 370 Jay

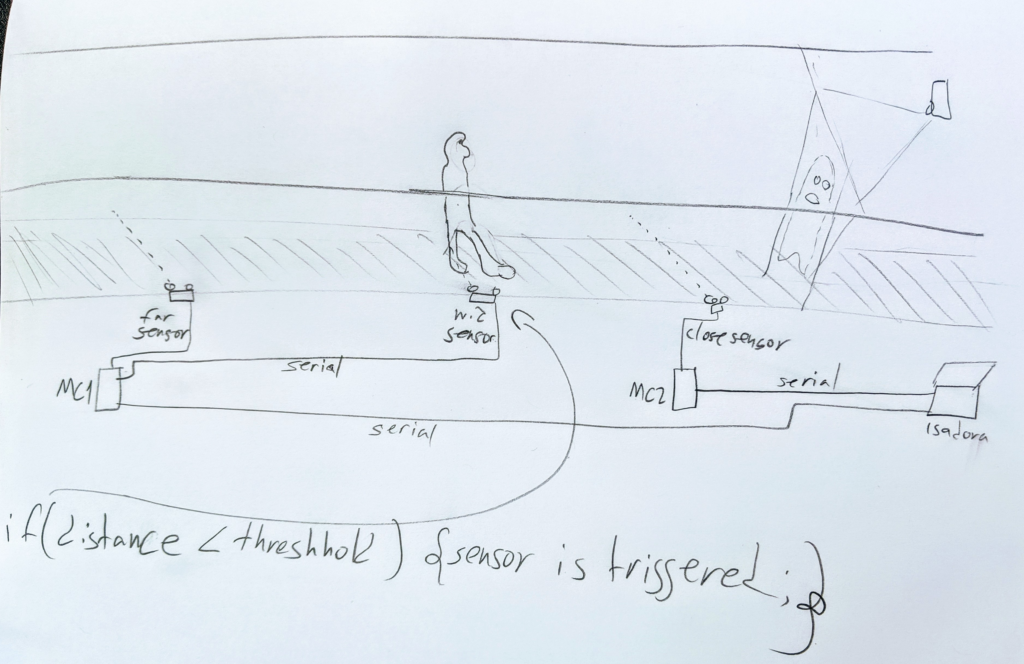

A haunted house installation designed for a long, narrow corridor.

The projected image and sound respond as a user approaches the screen onto which the image is projected.

The behavior we want to detect:

1. A user is at some initial distance from the image.

2. A user is halfway towards the image.

3. A user is very close to the image.

The sensor array consists of three ultrasonic range sensors, placed at the distances above. Each sensor measures the distance across the width of the corridor and sends a trigger when the measured distance drops below a set threshold, indicating that a person is passing through.
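A minimal sketch of one sensor's trigger logic, written in Python so it can be tested on a desktop; the same comparison would run inside the Arduino loop. The 90 cm threshold is a hypothetical value, not the project's calibration, and treating a reading of 0 as "no echo" is an assumption about how distances are read. Firing only on the reading where someone first enters the beam (edge detection) is one possible design choice, so that a person lingering in front of a sensor does not retrigger it on every loop.

```python
# Sketch of one sensor's trigger logic (desktop Python mirror of an Arduino loop).
# Assumptions: readings are in cm, 90 cm is a hypothetical trigger margin,
# and a reading of 0 means "no echo" and is ignored.

THRESHOLD_CM = 90  # hypothetical; calibrate against the empty-corridor reading


class CrossingDetector:
    """Fires once each time a reading first drops below the threshold."""

    def __init__(self, threshold_cm: float = THRESHOLD_CM) -> None:
        self.threshold_cm = threshold_cm
        self.occupied = False  # was someone in the beam on the previous reading?

    def update(self, distance_cm: float) -> bool:
        """Return True only on the reading where a person enters the beam."""
        now_occupied = 0 < distance_cm < self.threshold_cm
        fired = now_occupied and not self.occupied
        self.occupied = now_occupied
        return fired


if __name__ == "__main__":
    detector = CrossingDetector()
    readings = [118, 119, 62, 60, 65, 117, 118, 55]  # made-up sample readings
    print([detector.update(r) for r in readings])
    # [False, False, True, False, False, False, False, True]
```

Each of the three sensors would get its own detector instance, so the three triggers stay independent.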

The sensors feed into Arduinos, which send three separate variables over a serial connection to Isadora.

A projector placed behind the screen projects prerecorded video of a ghost-like character.

The different distance triggers will cue video and sound loops (the character calling the user to come closer, and then a surprise event when the user reaches the screen).

Process

In an attempt to simplify our setup, we tried using a single range sensor that would trigger the different events based on the distance measured from the projection surface (the end of the user’s journey). This raised two main issues:

1. The maximum effective measuring distance of the HC-SR04, which in our tests was around 2.5 meters, doesn’t afford enough space to create a narratively meaningful series of interactions between the user and the projection.

2. Pointing the sensor into open space introduced many more errors, caused by air movement and objects in the way. Pointing it at a wall about 2 meters away gave much more stable readings.
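The HC-SR04 reports distance as the width of its echo pulse, which equals the round-trip travel time of the sound. The sketch below converts that time to centimeters (assuming roughly 343 m/s, the speed of sound in air at about 20 °C) and maps a single sensor's reading to three zones, as in the single-sensor approach. The zone boundaries are hypothetical; the point is that all three zones must fit inside the roughly 2.5 m of usable range, which corresponds to an echo of only about 14.6 ms.

```python
# Sketch: HC-SR04 echo time to distance, plus single-sensor zone mapping.
# Assumption: speed of sound ~343 m/s (dry air, about 20 °C).
from typing import Optional

SPEED_OF_SOUND_CM_PER_US = 0.0343

# Hypothetical zone boundaries (upper limits in cm from the sensor).
ZONES = [(80, "close"), (160, "mid"), (250, "far")]


def echo_to_cm(echo_us: float) -> float:
    """Echo pulse width is the round trip, so halve the travel distance."""
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


def cm_to_echo_us(distance_cm: float) -> float:
    """Inverse: how long the echo pulse lasts for a target at distance_cm."""
    return 2 * distance_cm / SPEED_OF_SOUND_CM_PER_US


def zone_for(distance_cm: float) -> Optional[str]:
    """Map a distance to a zone name, or None if out of range or invalid."""
    if distance_cm <= 0:
        return None
    for limit_cm, name in ZONES:
        if distance_cm < limit_cm:
            return name
    return None


if __name__ == "__main__":
    print(round(cm_to_echo_us(250)))  # 14577 (about 14.6 ms for 2.5 m)
    print(zone_for(echo_to_cm(5831)))  # about 100 cm, prints: mid
```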

Once it was clear that the setup required multiple separate sensors at distances of up to 15 feet (about 4.5 m), there were two possible layouts:

1. Connect each sensor to a local Arduino and run three separate serial (USB) connections to the computer.

2. Use one Arduino at one location, provide local power to the other two sensors, and run a pair of copper wires (for the Trig and Echo pins) between each remote sensor and the main Arduino.

While it may seem simpler and cheaper to make a few long runs of copper wire than to buy multiple USB extension cables and Arduinos, we do not recommend option 2. Because we wanted to use the equipment we already had, we chose a middle-ground solution (two Arduinos and one “remote” sensor). Our initial tests with long copper wires were successful, but complications arose later on.

Arduino code for microcontroller 1 (far and mid sensors)

Arduino code for microcontroller 2 (close sensor)

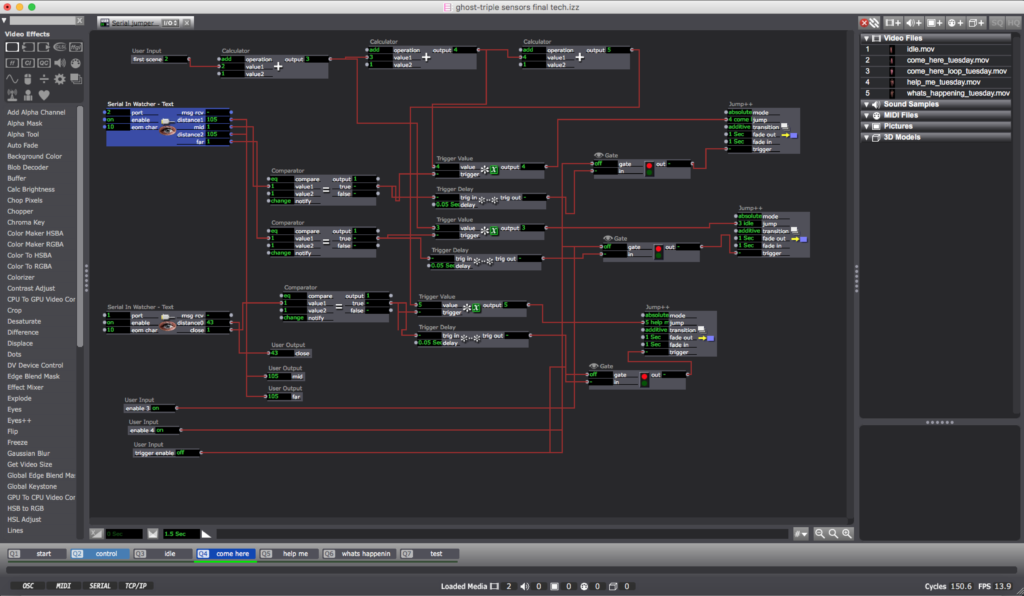

Isadora programming: the screenshot shows the actor that receives the serial data and cues the appropriate scene in the patch, depending on which distance sensor was triggered.
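Isadora handles scene selection visually in the patch. As a rough text equivalent, the hypothetical sequencer below maps each sensor to a scene and only accepts the next scene in order. The scene names other than “come here” and “help me”, and the sensor-to-scene mapping, are assumptions. Advancing one step at a time is one possible design, not necessarily how the original patch worked: it ignores a trigger that would skip a scene (such as the early jump to “help me” described under Challenges), though it cannot tell whether that trigger came from a faulty reading or a fast-moving visitor.

```python
# Hypothetical text version of the scene-cueing logic built in the Isadora patch.
# Scene names other than "come here" and "help me", and the sensor-to-scene
# mapping, are assumptions for illustration.

SCENES = ["idle", "come here", "help me", "surprise"]
SENSOR_TO_SCENE = {"far": 1, "mid": 2, "close": 3}  # index into SCENES


class SceneSequencer:
    """Advances through SCENES strictly in order, one step per trigger."""

    def __init__(self) -> None:
        self.index = 0  # start in "idle"

    def on_trigger(self, sensor: str) -> str:
        target = SENSOR_TO_SCENE[sensor]
        if target == self.index + 1:  # accept only the next scene
            self.index = target
        return SCENES[self.index]  # otherwise stay in the current scene

    def reset(self) -> None:
        """Return to idle, e.g. after the surprise scene has played."""
        self.index = 0


if __name__ == "__main__":
    seq = SceneSequencer()
    for sensor in ["mid", "far", "mid", "close"]:
        print(sensor, "->", seq.on_trigger(sensor))
    # mid -> idle, far -> come here, mid -> help me, close -> surprise
```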

The following pattern goes inside the Serial In Watcher actor to parse the serial data sent by the Arduino:

distance1:int=4 digits ',' mid:int=1 digits ',' distance2:int=4 digits ',' far:int=1 digits
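On the sending side, the fields have to line up with this pattern. The sketch below (Python, for offline checking) builds a message with the distances zero-padded to four digits and parses it back with a regular expression that mirrors the pattern. Zero-padding to a fixed width is an assumption about what the `4 digits` fields expect, but a four-character field satisfies them either way. On the Arduino, `sprintf(buf, "%04d,%d,%04d,%d", ...)` followed by `Serial.println(buf)` would produce the same text.

```python
# Sketch: build and check messages for the Serial In Watcher pattern
#   distance1:int=4 digits ',' mid:int=1 digits ',' distance2:int=4 digits ',' far:int=1 digits
# Assumption: fixed-width, zero-padded fields are what the "4 digits" fields expect.
import re
from typing import Optional, Tuple

LINE_PATTERN = re.compile(r"^(\d{4}),(\d),(\d{4}),(\d)$")


def format_line(distance1_cm: int, mid: int, distance2_cm: int, far: int) -> str:
    """One message: distances clamped to 0..9999 and zero-padded to 4 digits."""
    d1 = max(0, min(9999, int(distance1_cm)))
    d2 = max(0, min(9999, int(distance2_cm)))
    return f"{d1:04d},{int(mid)},{d2:04d},{int(far)}"


def parse_line(line: str) -> Optional[Tuple[int, int, int, int]]:
    """Parse a message field by field; None if it does not match the pattern."""
    match = LINE_PATTERN.match(line.strip())  # strip() drops the trailing \r\n
    if match is None:
        return None
    d1, mid, d2, far = (int(g) for g in match.groups())
    return d1, mid, d2, far


if __name__ == "__main__":
    msg = format_line(87, 1, 412, 0)
    print(msg)              # 0087,1,0412,0
    print(parse_line(msg))  # (87, 1, 412, 0)
    print(parse_line("87,1,412,0"))  # None: fields are not fixed-width
```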

During testing, passing the raw distance values into Isadora let us project them on the screen. This showed us how the sensors were behaving and helped us calibrate and troubleshoot the system.

Demo

Challenges

Because this is a site-specific project, it soon became clear that all testing and calibration of the sensors had to be done in the actual space. The sensors also had to be spaced far enough apart that their ultrasonic signals would not interfere with one another.

Ultimately, the system worked as expected about 75% of the time; in the other cases it fired the wrong scene (jumping ahead to “help me” instead of “come here”). We weren’t able to determine whether the problem lay with the sensors or with the programming in Isadora. In future projects I would like to try a fully code-based implementation and see whether it allows better debugging.

User behavior/feedback

Users responded differently to the installation. Some proceeded cautiously, giving enough time for each video beat to register, while others sped through. Once users noticed the sensors, or surmised their presence, the interaction lost its implicit nature and its surprise element.

How can we design the interaction so that the viewer goes through the narrative and emotional process we created? More thought should go into the details of the video itself (text, body language) and into having more control over the space, transforming it into an environment for the experience rather than a path to walk through.

Posted in Fall '19 - Introduction to Physical Computation